It's true that part of the industry is using a sensor-fusion approach, but Mobileye is on a slightly different path. This article from Mobileye discusses the role of cameras vs. radar and LiDAR:

A differentiated approach for bringing the cutting edge of tomorrow’s self-driving technology to the market today, with safety and scalability at the core.

www.mobileye.com

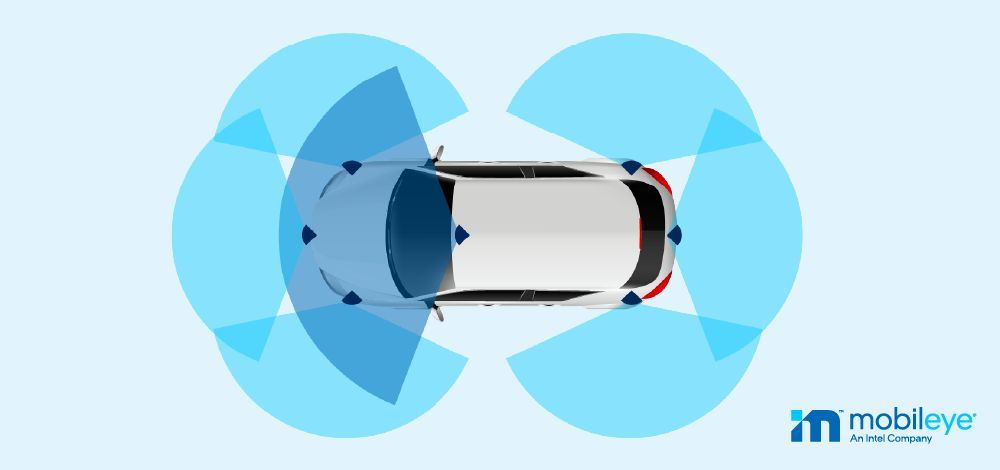

"From the outset, Mobileye’s philosophy has been that if a human can drive a car based on vision alone – so can a computer. Meaning, cameras are critical to allow an automated system to reach human-level perception/actuation: there is an abundant amount of information (explicit and implicit) that only camera sensors with full 360 degree coverage can extract, making it the backbone of any automotive sensing suite. . . . While other sensors such as radar and LiDAR may provide redundancy for object detection – the camera is the only real-time sensor for driving path geometry and other static scene semantics (such as traffic signs, on-road markings, etc.)." [my emphasis]

A more recent article explains that, as Mobileye moves up the ladder into Level 3 and Level 4 autonomy, it's now also using LiDAR and radar as primary sensors, but it is still processing those data streams separately from the camera inputs to create redundancy:


"Mobileye’s differentiated approach of True Redundancy creates two parallel AV sub-systems, with two independent models of the driving environment – one from cameras, one from radar and LiDAR – each operating independently of the other." [my emphasis]

Dr. Eugene Lee, Lucid's head of ADAS, was the primary force behind GM's Super Cruise system. That system does use LiDAR, but only to build its digital maps; LiDAR is not part of the real-time data stream:

"Similar to other assisted driving systems, the Cadillac’s gathers real-time data from cameras, GPS, and radars. But Super Cruise adds another element with LiDAR-scanned map data. By precision mapping controlled-access highways (e.g., divided freeways that require on- and off-ramps), Cadillac Super Cruise is beneficial on long-distance trips because factors like intersections are eliminated from the equation." (source: https://www.jdpower.com/cars/shopping-guides/how-does-cadillac-super-cruise-work)

Lucid apparently isn't going to reveal much detail about its ADAS system, but we know it worked first with Mobileye and now has the father of Super Cruise leading its effort . . . and neither of those systems fuses LiDAR-generated data with camera-generated data. This isn't a definitive answer to the question of how Lucid uses LiDAR data, but it's all we've got to go on until Lucid reveals more.